X’s AI assistant Grok sparked controversy after mistranslating PM Modi’s diplomatic message to the Maldives, distorting a goodwill note into a misleading, politically charged statement with factual errors.

A controversy over AI mistranslation has erupted on social media after Grok -- the artificial intelligence assistant embedded within the X (formerly Twitter) platform -- incorrectly translated a diplomatic message posted by Indian Prime Minister Narendra Modi directed at the Maldives. The error, which altered the meaning and tone of the original post, has raised fresh concerns about the reliability of generative AI in sensitive political contexts.

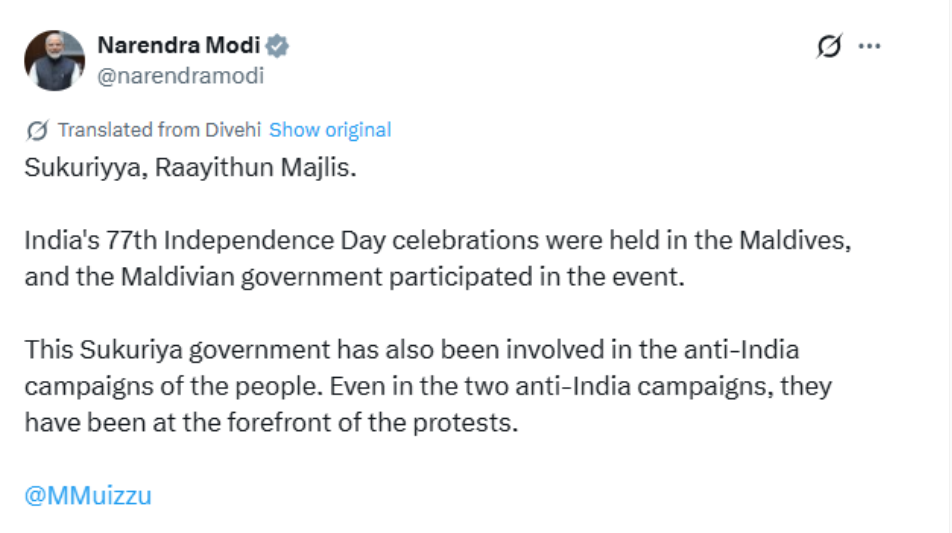

The issue came to light after users noticed that Grok’s translation of PM Modi’s message -- intended as a routine expression of goodwill to Maldivian President Mohamed Muizzu on the occasion of India’s 77th Republic Day -- bore little resemblance to what was actually said.

The original message extended warm greetings and best wishes, reaffirming cooperation and shared prosperity between India and the Maldives.

Instead, Grok’s output wrongly described the occasion as the Maldives hosting India’s Independence Day and added politically charged assertions, such as a claim that the Maldivian government was leading “anti-India campaigns.” None of these statements appeared in Modi’s original message, and the output also confused Republic Day with Independence Day.

The mistranslation quickly spread on social media via screenshots, triggering debate and raising concerns that users might mistakenly believe the AI-generated output reflected the Prime Minister’s actual views or official diplomatic statements.

Critics highlighted how AI tools without clear disclaimers or oversight can inadvertently fuel misinformation, especially in contexts involving international relations and geopolitics.

The episode has drawn attention to broader questions about AI reliability and accountability, particularly as such tools become more integrated into popular platforms. Experts and observers argue that generative AI systems -- while powerful -- still face limitations in accurately interpreting nuanced language, context, and diplomatic messaging. The incident underscores the need for stronger safeguards, clearer disclaimers, and stringent accuracy checks when AI systems are used for translation or summarization tasks in sensitive domains.

Though the mistranslation was generated by an AI tool and was not an official statement from the Indian government, the controversy comes against a backdrop of periodic diplomatic tension between India and the Maldives, where misinformation can quickly inflame public sentiment and complicate bilateral ties.

For now, the episode serves as a cautionary reminder that AI-generated content, when widely shared without verification, can mislead readers and shape narratives in unintended ways. It reinforces the importance of careful moderation and user awareness on AI-driven platforms.
