Google’s Project Genie allows users to generate immersive digital worlds instantly with simple text prompts or images, then navigate them in real time.

- Project Genie is an early-stage research model designed to build living, interactive worlds.

- The tool relies on Google’s Genie world model, which simulates physics, movement, and object interactions.

- Unity CEO says that these tools expand creative possibilities but are not yet ready to replace traditional game engines.

Unity Software Inc. (U) stock slid sharply on Friday after Google, a unit of tech giant Alphabet Inc. (GOOG, GOOGL), unveiled a new AI-driven tool that could change how virtual environments are created.

The initiative, known as Project Genie, allows users to generate immersive digital worlds instantly, using simple text prompts or images, and then navigate those environments in real time.

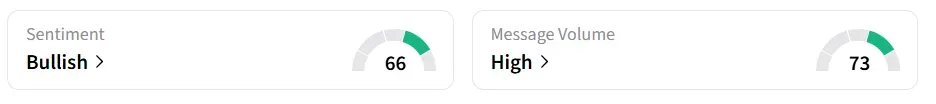

Unity, known for its widely used game development engine, saw its stock tumble about 21% by mid-morning Friday. On Stocktwits, however, retail sentiment around the stock jumped to ‘bullish’ from ‘extremely bearish’ a day earlier, and message volume rose to ‘high’ from ‘low’ over the same 24 hours.

Project Genie

Project Genie is an early-stage research model designed to build living, interactive worlds. Users can create characters, define landscapes, and explore environments that continuously expand as they move. The system generates scenery in real time, enabling exploration by walking, driving, flying, or riding through AI-built settings.

The tool relies on Google’s Genie world model, which simulates physics, movement, and object interactions while maintaining consistency across environments.

How Did The CEO React?

Unity CEO Matthew Bromberg took to the social platform X to reassure developers and investors, explaining how “world model” technology fits into Unity’s long-term strategy.

In his post, Bromberg said that these tools expand creative possibilities but are not yet ready to replace traditional game engines. He added that while results from the models can appear sophisticated, the outputs remain probabilistic, meaning they can change unpredictably with each interaction.

That lack of consistency makes them difficult to use in games that require precise mechanics and repeatable player experiences, Bromberg said.

“Rather than viewing this as a risk, we see it as a powerful accelerator. Video-based generation is exactly the type of input our Agentic AI workflows are designed to leverage—translating rich visual output into initial game scenes that can then be refined with the deterministic systems Unity developers use today.”

- Matthew Bromberg, CEO, Unity

U stock has gained over 32% in the last 12 months.

For updates and corrections, email newsroom[at]stocktwits[dot]com.