Google I/O 2024: Project Astra is now 'universal AI agent', can read anything through phone's lens; WATCH

Synopsis

Google's Project Astra, a new AI agent, answers queries in real time from text, audio, or video input. It can identify objects, explain code, find misplaced items, and even suggest names for a dog. Astra's capabilities will be integrated into the Gemini app via the Gemini Live interface.

The keynote at Google I/O 2024 was, unsurprisingly, all about AI. The company has a lot of catching up to do, with OpenAI having upgraded ChatGPT to its GPT-4o model earlier this week. The keynote showed the work Google has been doing behind the scenes with the help of its Google DeepMind AI team.

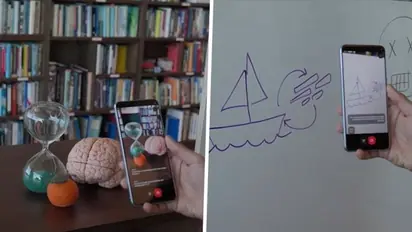

One of the products to emerge from the lab is Project Astra, a next-generation AI assistant that Google says brings AI to mobile devices, using spatial understanding and video processing to give accurate answers.

Google's approach, which is built on Gemini, is essentially its way of telling OpenAI that it is ready for a fight. So, how does this version of the AI assistant operate? It takes input from your phone's camera and helps you make sense of what's around you. Google showed a short demonstration of this during the keynote.

It can even read code on a PC screen and help you work out what it does or solve tricky coding problems. That's not all: you can point the camera at the street and ask the assistant where you are, then ask for more specific information if necessary.

Moreover, Google showcased Project Astra running on both a smartphone and smart glasses, suggesting that a major Gemini-powered revamp of Google Lens could be on the way. Google says Project Astra is able to process information faster by encoding video frames, combining video and speech input into a timeline of events, and caching all this information for recall.
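To make that description concrete, here is a minimal Python sketch of the general idea: video and speech inputs are merged into one time-ordered list, which is kept in memory so earlier moments can be looked up later. Everything in it is an assumption for illustration. The names `Event`, `Timeline`, `add` and `recall` are hypothetical, the "encoded frame" is just a short text description, and the keyword search stands in for whatever recall mechanism Astra actually uses, which Google has not detailed.

```python
from bisect import insort
from dataclasses import dataclass, field


@dataclass(order=True)
class Event:
    """One entry on the timeline; ordering uses the timestamp only."""
    timestamp: float
    kind: str = field(compare=False)     # "video" or "speech" (hypothetical labels)
    payload: str = field(compare=False)  # stand-in for an encoded frame or a transcript


class Timeline:
    """Hypothetical in-memory cache of events, kept sorted by time."""

    def __init__(self):
        self._events = []

    def add(self, event):
        # Insert while preserving time order, even if inputs arrive out of order.
        insort(self._events, event)

    def events(self):
        return list(self._events)

    def recall(self, keyword):
        # Return the most recent event mentioning the keyword, or None.
        for event in reversed(self._events):
            if keyword.lower() in event.payload.lower():
                return event
        return None


timeline = Timeline()
timeline.add(Event(2.0, "speech", "where did I leave my glasses?"))
timeline.add(Event(1.0, "video", "glasses on the desk next to a red apple"))

match = timeline.recall("apple")
print(match.kind, match.timestamp)
```

In this toy version, a question such as "where did I leave my glasses?" could be answered by looking back through the cached video events rather than by re-examining the live camera feed.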

Google said these Project Astra features will be available through Gemini Live in the main Gemini app later this year. The technology will first run on Pixel phones, but Google hopes to extend the AI helper to smart glasses and true wireless (TWS) earbuds in the future.
